The NoSQL and Spark Ecosystem: A C-Level Guide

New Technologies | New Possibilities

As a C-level executive, I find it increasingly clear that NoSQL databases and machine learning toolsets like Spark will play a growing role in data-driven business models, low-latency architecture, and rapid application development (projects that can be done in 8-12 weeks, not years).

Best-practice firms are making this technology shift as decreasing storage costs have led to an explosion of big data. Commodity cluster software, like Hadoop, has made it 10-20x cheaper to store large datasets.

After spending two days in NYC at MongoDB World, the event hosted by leading NoSQL provider MongoDB, I was pleasantly surprised by the amount of innovation and the size of the user community around document-centric databases like MongoDB.

Data Driven Insight Economy

It doesn’t take a genius to realize that data-driven business models, high-volume data feeds, mobile-first customer engagement, and cloud are creating new distributed database requirements. Today’s online and mobile applications need continuous availability, cost-effective scalability and high-speed analytics to deliver an engaging customer experience.

We know instinctively that there is value in all the data being captured in the world around us. The question is no longer “if there is value” but “how to extract that value and apply it to the business to make a difference”.

Legacy relational databases fail to meet the requirements of digital and online applications for the following reasons:

New Tools for New Times – Primer on Big Data, Hadoop and “In-memory” Data Clouds

Data growth curve: Terabytes -> Petabytes -> Exabytes -> Zettabytes -> Yottabytes -> Brontobytes -> Geopbytes. It is getting more interesting.

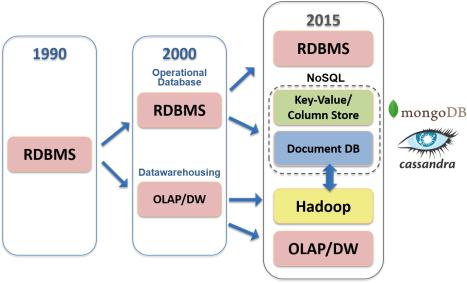

Analytical Infrastructure curve: Databases -> Datamarts -> Operational Data Stores (ODS) -> Enterprise Data Warehouses -> Data Appliances -> In-Memory Appliances -> NoSQL Databases -> Hadoop Clusters

———————

In most enterprises, public or private, there is typically a mountain of structured and unstructured data that contains potential insights about how to serve customers better, engage with them better, and make processes run more efficiently. Consider this:

- Online firms–including Facebook, Visa, Zynga–use Big Data technologies like Hadoop to analyze massive amounts of business transactions, machine generated and application data.

- Wall Street investment banks, hedge funds, algorithmic and low-latency traders are leveraging data appliances such as EMC Greenplum hardware with Hadoop software to do advanced analytics in a “massively scalable” architecture.

- Retailers use HP Vertica or Cloudera to analyze massive amounts of data simply, quickly and reliably, resulting in “just-in-time” business intelligence.

- New public and private “data cloud” software startups capable of handling petascale problems are emerging to create a new category – Cloudera, Hortonworks, Northscale, Splunk, Palantir, Factual, Datameer, Aster Data, TellApart.

Data is seen as a resource that can be extracted, refined and turned into something powerful. It takes a certain amount of computing power to analyze the data and to pull out and use those insights. That is where new tools like Hadoop, NoSQL, in-memory analytics and other enablers come in.

What business problems are being targeted?

Why are some companies in retail, insurance, financial services and healthcare racing to position themselves in Big Data and in-memory data clouds while others don’t seem to care?

World-class companies are targeting a new set of business problems that were hard to solve before: modeling true risk, customer churn analysis, flexible supply chains, loyalty pricing, recommendation engines, ad targeting, precision targeting, PoS transaction analysis, threat analysis, trade surveillance, search quality fine tuning, and mashups such as location + ad targeting.

To address these petascale problems, an elastic, adaptive infrastructure for data warehousing and analytics is converging around three capabilities:

- Analyze transactional, structured and unstructured data on a single platform

- Use low-latency in-memory or solid-state drive (SSD) storage for super-high-volume web and real-time apps

- Scale out with low-cost commodity hardware; distribute processing and workloads
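
For readers who want to see what “scale out and distribute processing” means in practice, here is a minimal Python sketch. It is a toy illustration under stated assumptions, not how Hadoop or Spark is actually implemented: threads on one machine stand in for commodity nodes, and the function names `partition`, `count_words` and `scale_out_word_count` are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(records, n_workers):
    """Split records into n_workers roughly equal chunks (round-robin)."""
    return [records[i::n_workers] for i in range(n_workers)]


def count_words(chunk):
    """Map step: each worker counts words in its own chunk only."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def scale_out_word_count(records, n_workers=4):
    """Distribute the chunks to workers, then merge the partial counts."""
    chunks = partition(records, n_workers)
    # Assumption: threads stand in for the separate machines of a real cluster.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(count_words, chunks))
    total = Counter()
    for partial in partials:  # reduce step: combine per-worker results
        total.update(partial)
    return total
```

The point of the design is that each worker only ever sees its own chunk, so adding workers shrinks the share of data each one has to handle; only the small partial results are brought together at the end.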

As a result, a new BI and Analytics framework is emerging to support public and private cloud deployments.